Why Predictive Maintenance Is Now a Supply Chain Strategy

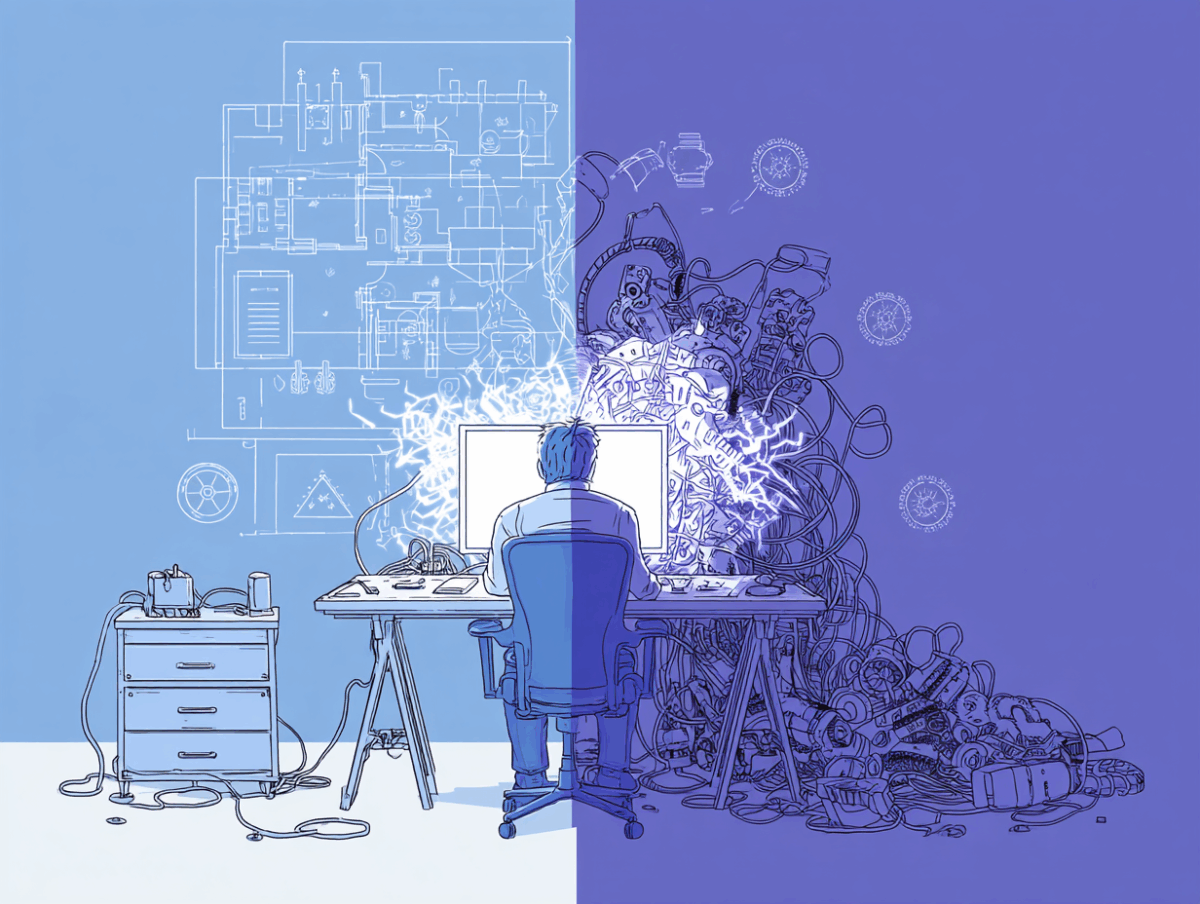

For decades, maintenance and supply chain operated on parallel tracks: close enough to affect each other, but rarely integrated in any meaningful way. Today, that separation is no longer viable. In an environment defined by volatility, tight labor markets, aging assets, compressed planning cycles, and rising customer expectations, unplanned downtime has become one of the most significant sources of supply chain instability.

Predictive maintenance (PdM) is not simply a new engineering tool. It has become a foundational supply chain capability—essential for achieving stable flow, reliable delivery, and competitive cost-to-serve. Companies that treat PdM as an operations side project will underperform. Companies that elevate it to a network-wide strategy will create meaningful and durable advantage.

The Shift: Modern Supply Chains Are Flow Systems, Not Cost Centers

The biggest change in the last five years is that supply chains have evolved from back-office cost centers to strategic flow systems. Leadership teams now optimize for continuity, resilience, and customer fulfillment—not just unit cost.

This shift was accelerated by three forces:

- Global volatility and geopolitical risk created unpredictable lead times.

- The expansion of North American manufacturing capacity put more pressure on aging equipment.

- Demand variability shortened planning horizons, making flow stability more valuable than efficiency alone.

In this environment, any disruption, especially on a critical production asset, multiplies operational risk downstream. A single hour of downtime costs far more than one hour of lost production. It cascades through the entire network: missed sequencing windows, schedules that no longer hold, premium freight, labor inefficiencies, and days of recovery.

This “flow disruption multiplier” places asset reliability squarely inside the supply chain strategy domain.

The Hidden Link Between Maintenance and Supply Chain Performance

Maintenance has traditionally been measured on technical KPIs such as mean time between failures (MTBF), mean time to repair (MTTR), and work-order closure. These matter, but they are incomplete. The real business impact shows up in supply chain metrics:

- Lead-time reliability

- Throughput consistency

- Schedule adherence

- Inventory accuracy

- Customer fill rates

- On-time, in-full (OTIF) performance

Unplanned downtime is one of the largest root causes of supply chain instability, yet most organizations treat it as an internal operations problem rather than a network-wide performance risk.

Aging Assets Magnify the Problem

North American factories often run equipment that is 20–40 years old. Spare parts availability is inconsistent. Tribal knowledge is disappearing. Skilled technicians are retiring faster than they can be replaced.

In this environment, relying on reactive or purely calendar-based preventive maintenance creates unacceptable variability. The supply chain feels the consequences long before leadership recognizes the root cause.

The Cost of Lost Flow Is Larger Than Most Leaders Expect

Downtime triggers:

- Emergency procurement

- Overtime and rebalancing

- Excess buffer inventory

- Premium freight

- Customer delivery misses

- Lower asset utilization and higher cost-to-serve

Predictive maintenance minimizes these cascading impacts by identifying failures before they disrupt flow, giving planners time to adjust schedules and logistics teams time to re-plan shipments.

Why Predictive Maintenance Has Become a Supply Chain Capability

Predictive maintenance uses sensing, telemetry, and historical data to forecast failures before they occur. What once required highly specialized vibration analysts or full-time condition monitoring teams can now be done at scale using AI/ML models.
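Production PdM systems usually rely on richer models than this, but the core idea of forecasting a failure from telemetry can be sketched simply. The example is a minimal illustration under stated assumptions, not a recommended model: it assumes a single health indicator (for example, vibration RMS) that degrades roughly linearly, fits a least-squares trend, and extrapolates to an alarm threshold to estimate hours of remaining useful life. The function name, the readings, and the threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Forecast:
    slope_per_hour: float
    hours_to_threshold: Optional[float]  # None when there is no upward trend


def estimate_hours_to_threshold(
    hours: Sequence[float], values: Sequence[float], threshold: float
) -> Forecast:
    """Hypothetical helper: fit y = a + b*t by ordinary least squares and
    extrapolate the trend to the alarm threshold (assumes linear degradation)."""
    n = len(hours)
    if n < 2 or n != len(values):
        raise ValueError("need at least two paired readings")
    mean_t = sum(hours) / n
    mean_y = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in hours)
    if sxx == 0:
        raise ValueError("timestamps must not all be equal")
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in zip(hours, values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_t
    if slope <= 0:
        # Flat or improving signal: this simple model predicts no crossing.
        return Forecast(slope, None)
    crossing_time = (threshold - intercept) / slope
    # Remaining time is measured from the latest reading, clamped at zero
    # if the trend line has already passed the threshold.
    remaining = max(0.0, crossing_time - max(hours))
    return Forecast(slope, remaining)


# Hypothetical readings taken once a day, with an assumed alarm threshold of 4.5.
forecast = estimate_hours_to_threshold(
    [0, 24, 48, 72, 96], [2.0, 2.2, 2.4, 2.6, 2.8], threshold=4.5
)
print(round(forecast.hours_to_threshold, 1))  # 204.0
```

The output is the kind of early-warning signal the rest of this article is about: an estimated time window, not just an alarm.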

But the shift is not only technical—it is strategic.

Predictability Improves Every Downstream Metric

When you can anticipate when an asset will degrade or fail, you improve:

- Schedule accuracy

- Production planning stability

- Logistics slotting and carrier planning

- Inventory precision

- Workforce allocation

- Customer commitments

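Predictive maintenance produces early-warning signals that can integrate directly with planning systems and processes: material requirements planning (MRP), manufacturing execution systems (MES), advanced planning and scheduling (APS), and sales and operations planning (S&OP). That integration is what transforms it into a supply chain capability.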

Real-Time Visibility Across the Network

PdM enables a connected ecosystem in which maintenance, operations, planning, procurement, and logistics share one source of truth about asset health.

This streamlines decision-making:

- Planners see expected downtime windows weeks ahead.

- Procurement knows which parts will be required and when.

- Logistics can re-slot deliveries, labor, and outbound shipments.

- Customer teams can adjust promise dates with high confidence.

Resilience becomes a managed process—not a reaction.
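To make the planner and procurement points concrete, here is a hypothetical sketch of how a single failure prediction might be turned into two dates: the latest day to schedule the maintenance outage, and the latest day to order the parts it needs. The safety margin, the supplier lead time, and all names are illustrative assumptions, not fields from any particular MRP or APS product.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class PlanningActions:
    latest_maintenance_date: date  # schedule the outage no later than this
    parts_order_by: date           # place the parts order no later than this
    parts_at_risk: bool            # True if the lead time no longer fits the window


def plan_from_prediction(
    today: date,
    predicted_failure: date,
    safety_margin_days: int,
    parts_lead_time_days: int,
) -> PlanningActions:
    """Hypothetical helper: back-schedule from a predicted failure date."""
    # Leave a safety margin before the predicted failure for the planned outage.
    latest_maintenance = predicted_failure - timedelta(days=safety_margin_days)
    # Parts must arrive before the outage, so subtract the supplier lead time.
    order_by = latest_maintenance - timedelta(days=parts_lead_time_days)
    return PlanningActions(latest_maintenance, order_by, order_by < today)


# Hypothetical example: failure predicted four weeks out, 7-day margin, 10-day lead time.
actions = plan_from_prediction(date(2024, 3, 1), date(2024, 3, 29), 7, 10)
print(actions.latest_maintenance_date, actions.parts_order_by, actions.parts_at_risk)
# 2024-03-22 2024-03-12 False
```

If the order-by date has already passed, the flag tells planners that the parts lead time no longer fits inside the predicted window, which is the signal to expedite the parts or pull the outage forward.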

Use Cases: How Predictive Maintenance Strengthens the End-to-End Value Chain

1. Production Planning Integration

PdM allows planners to adjust production schedules proactively rather than react to breakdowns. This results in higher adherence to plan, fewer changeovers, and better labor utilization.

2. Supplier and Component Synchronization

Forecasting failure modes helps procurement secure the right components ahead of time. This reduces parts stockouts and largely avoids emergency sourcing.

3. Logistics and Transportation Optimization

Stable production generates stable outbound flows. Carriers are scheduled more efficiently. Premium freight drops. Warehouses operate with fewer surprises.

4. Inventory Optimization

With fewer disruptions, companies can reduce safety stock without increasing risk. Excess working capital tied up in inventory can be redeployed elsewhere.
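The safety stock point can be illustrated with one common textbook formula, which assumes that demand and replenishment lead time are independent and approximately normally distributed: SS = z * sqrt(L * sigma_d^2 + d^2 * sigma_L^2), where z is the service-level factor, d and sigma_d are the mean and standard deviation of daily demand, and L and sigma_L are the mean and standard deviation of lead time in days. Treating unplanned downtime as a source of lead-time variability is an assumption of this sketch, and the input figures are hypothetical.

```python
import math
from statistics import NormalDist


def safety_stock(
    service_level: float,
    mean_daily_demand: float,
    sd_daily_demand: float,
    mean_lead_time_days: float,
    sd_lead_time_days: float,
) -> float:
    """Textbook safety stock with independent demand and lead-time variability:
    SS = z * sqrt(L * sigma_d**2 + d**2 * sigma_L**2)."""
    if not 0 < service_level < 1:
        raise ValueError("service_level must be strictly between 0 and 1")
    # z-score for the target cycle service level (about 1.645 at 95%).
    z = NormalDist().inv_cdf(service_level)
    variance = (
        mean_lead_time_days * sd_daily_demand ** 2
        + mean_daily_demand ** 2 * sd_lead_time_days ** 2
    )
    return z * math.sqrt(variance)


# Hypothetical item: 100 units/day (sd 20), 5-day mean lead time, 95% service level.
before = safety_stock(0.95, 100, 20, 5, sd_lead_time_days=2.0)  # erratic supply
after = safety_stock(0.95, 100, 20, 5, sd_lead_time_days=0.5)   # stable supply
print(round(before), round(after))  # 337 110
```

In this illustration, cutting lead-time variability from 2 days to half a day reduces the required buffer by roughly two thirds at the same service level, which is the mechanism behind reducing safety stock without increasing risk.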

5. Workforce Enablement

Technicians work with diagnostic insights, not guesswork. Less firefighting. More planned work. Higher productivity and safer operations.

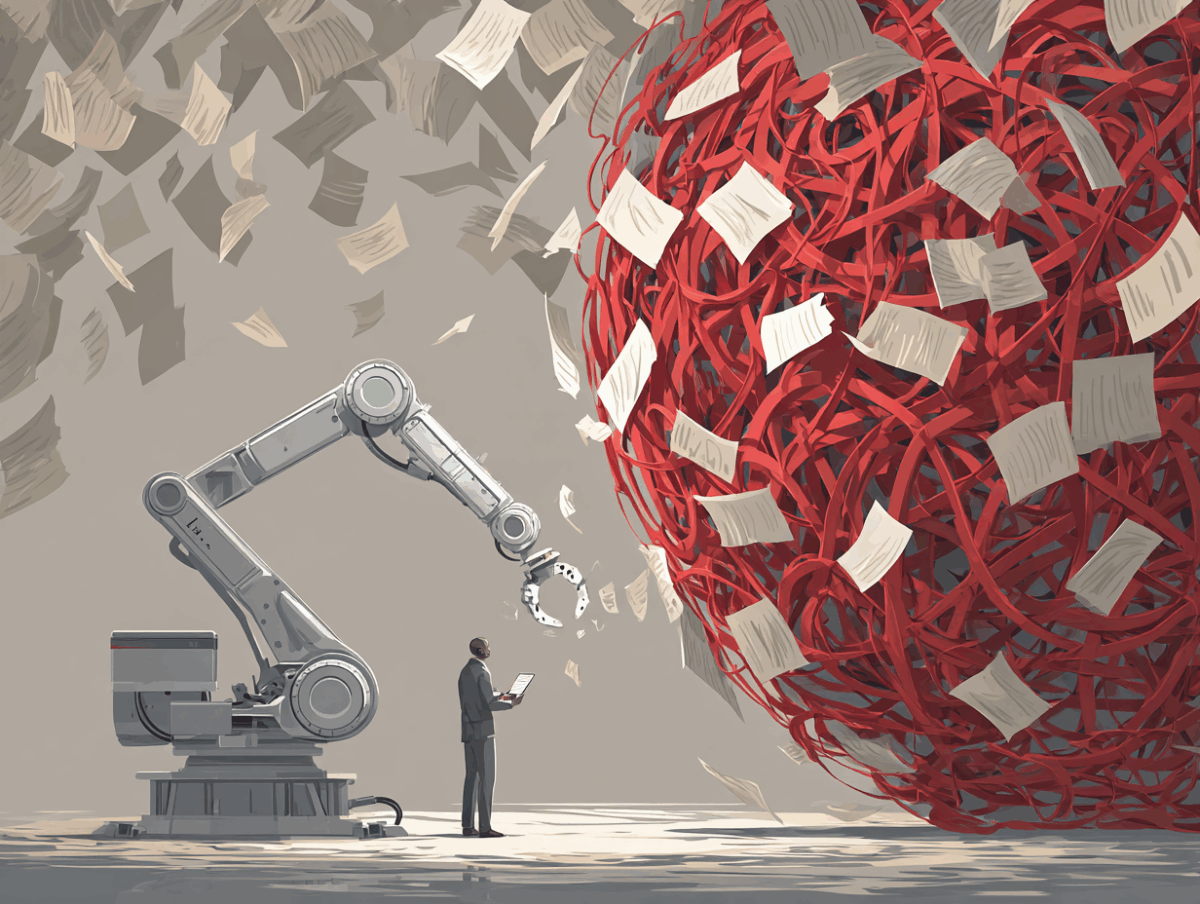

Why Many Predictive Maintenance Initiatives Fail

Despite the value, many PdM programs stall or underperform because they are approached as engineering projects rather than supply chain programs.

The most common failure points:

- Fragmented data across PLCs, SCADA, CMMS, and historians

- Sensors deployed without a clear operating model

- No standard governance or maintenance maturity baseline

- Lack of integration with planning and logistics systems

- Weak business cases that do not link to supply chain KPIs

- Under-resourcing of change management and frontline adoption

Predictive maintenance requires a unified strategy—technology is only one component.

What “Good” Looks Like

High-performing organizations adopt a scalable operating model:

- Unified data architecture across plants and systems

- Asset-criticality ranking tied to supply chain risk

- Standardized maintenance playbooks across sites

- Cross-functional governance

- Real-time dashboards connecting asset health and flow stability

- Alerts embedded in S&OP, MRP, and scheduling systems

This approach ensures that every early-warning signal leads to an operational decision.
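Asset-criticality ranking tied to supply chain risk can be defined in many ways. One simple scoring rule, assumed here rather than prescribed by this article, is the expected cost of lost flow: the probability that an asset fails within the planning horizon, times the expected outage length, times the downstream cost per hour of lost flow. The fields, asset names, and figures below are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Asset:
    name: str
    failure_probability: float    # assumed: P(failure within horizon), e.g. from a PdM model
    expected_outage_hours: float  # assumed: repair plus recovery time
    flow_cost_per_hour: float     # assumed: freight, overtime, missed orders per hour down

    @property
    def risk_score(self) -> float:
        # Expected cost of lost flow over the planning horizon.
        return self.failure_probability * self.expected_outage_hours * self.flow_cost_per_hour


def rank_by_supply_chain_risk(assets: List[Asset]) -> List[Asset]:
    """Hypothetical helper: highest expected flow-loss cost first."""
    return sorted(assets, key=lambda a: a.risk_score, reverse=True)


assets = [
    Asset("press", 0.10, 12, 5000),     # expected cost about 6,000
    Asset("conveyor", 0.40, 2, 1500),   # expected cost about 1,200
    Asset("packer", 0.05, 48, 4000),    # expected cost about 9,600
]
for asset in rank_by_supply_chain_risk(assets):
    print(asset.name, round(asset.risk_score))
# packer 9600
# press 6000
# conveyor 1200
```

The ranking can differ from a purely technical one: in this example the asset most likely to fail (the conveyor) poses the least supply chain risk, which is why criticality should be tied to flow impact rather than failure likelihood alone.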

The Strategic Payoff

When predictive maintenance becomes a supply chain capability, performance strengthens across the board:

- Higher uptime → higher throughput

- More stable schedules → lower logistics cost

- Better asset predictability → more accurate inventory and customer commitments

- Less disruption → lower cost-to-serve and higher margin

- Stronger resilience → competitive differentiation

In a world where volatility is the new normal, predictive maintenance is no longer optional. It is a core pillar of modern supply chain design.